AI art is no longer just a trendy term; it is a genuine shift in how images get made. Among the many AI tools available, Stable Diffusion has become a leading option for experts and amateur creators alike. In this article, we explain what makes Stable Diffusion unique, how to use it efficiently, and which alternatives might be a better fit for you.

Part 1: Understanding Stable Diffusion

If you are new to AI image generation, start with the fundamentals of Stable Diffusion. This part covers what it is, what it costs, its key features, and where it works best.

What is Stable Diffusion?

Stable Diffusion is an open-source AI image generator that turns text prompts into detailed images. It relies on deep learning models trained on large image datasets. Developers, designers, and artists use it to produce concept art, illustrations, and photo-realistic graphics quickly. It can run locally or on compatible online platforms.

Pricing of Stable Diffusion

The online Stable Diffusion service offers tiered pricing to suit different user requirements. The Basic plan costs $27 per month and includes 13,000 image generations. The Standard plan costs $47 per month and includes 40,000 generations plus API access. For more experienced users, the $147/month Premium plan offers unlimited video creation, API calls, and all editing capabilities.
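To compare the plans, it helps to work out the effective price per image. The sketch below uses only the Basic and Standard figures quoted above; the Premium plan is left out because no image quota is stated for it. The function names are illustrative and not part of any official tool.

```python
from typing import Optional

# Monthly price and image quota for each plan, as quoted in this article.
PLANS = {
    "Basic": {"price_usd": 27, "generations": 13_000},
    "Standard": {"price_usd": 47, "generations": 40_000},
}


def cost_per_image(price_usd: float, generations: int) -> float:
    """Return the effective price of one generation in US dollars."""
    if generations <= 0:
        raise ValueError("generations must be positive")
    return price_usd / generations


def cheapest_plan(images_per_month: int) -> Optional[str]:
    """Return the lowest-priced plan whose quota covers the volume, or None."""
    candidates = [
        (plan["price_usd"], name)
        for name, plan in PLANS.items()
        if plan["generations"] >= images_per_month
    ]
    return min(candidates)[1] if candidates else None


if __name__ == "__main__":
    for name, plan in PLANS.items():
        cents = 100 * cost_per_image(plan["price_usd"], plan["generations"])
        print(f"{name}: about {cents:.2f} cents per image at full quota")
```

If you use the whole quota, that works out to roughly 0.21 cents per image on Basic and 0.12 cents on Standard, so the larger plan is cheaper per image only if you actually need the volume.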

Key Features of Stable Diffusion

- Stable Diffusion uses strong, pre-trained AI models to transform detailed text prompts into imaginative, high-quality visuals in a matter of seconds.

- Modify existing photos or extend them beyond their original borders while keeping a consistent aesthetic.

- Once you download Stable Diffusion, custom-trained models or LoRA files can be easily integrated to adjust the AI's subject matter or artistic style.

- Use sophisticated prompt control to improve output precision and get rid of undesirable characteristics like distortion or blurriness.

Applications of Stable Diffusion

- Produce beautiful concept art, illustrations, and anime-style artwork for commissions or creative projects with little effort.

- Use highly customized AI visualizations to quickly mock up concepts for home décor, product packaging, or apparel lines.

- Make captivating images for blogs, presentations, social media posts, and advertisements without paying a designer.

- Create aesthetically pleasing and high-quality characters, settings, and scenes for video games, comics, or animations.

- To support architectural presentations and planning procedures, render the exteriors, interiors, or full cityscapes of buildings.

Easy Stable Diffusion AI alternative

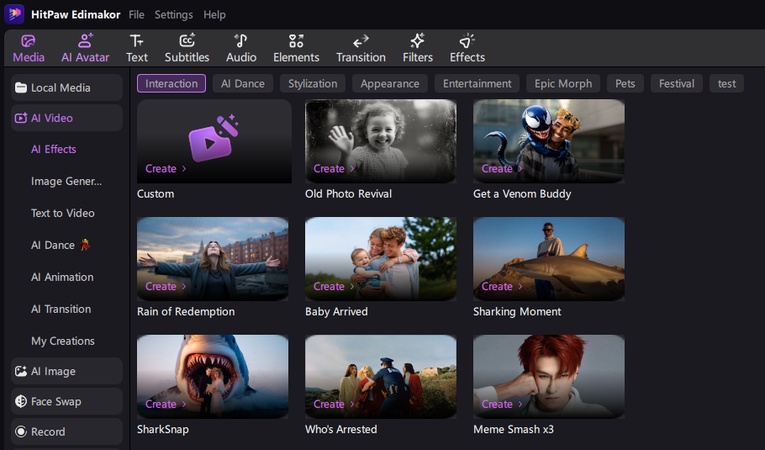

HitPaw Edimakor is an easy alternative to the Stable Diffusion web UI. Here are the steps to use it:

Step 1: Open HitPaw Edimakor (Video Editor)

Install HitPaw Edimakor and launch it. Its AI tools are available from the main dashboard, so you can begin producing AI-generated images in a matter of minutes.

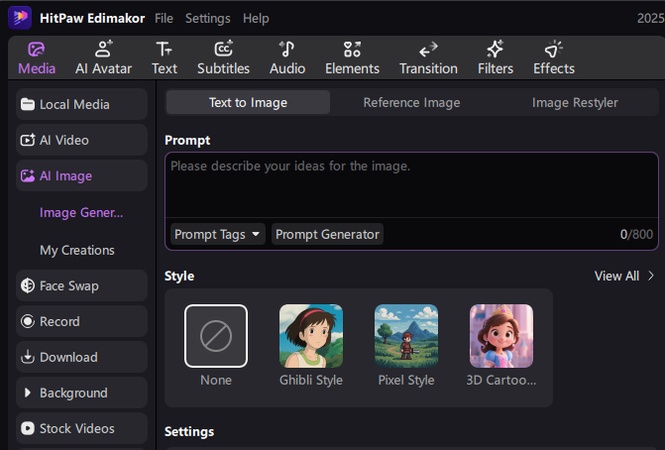

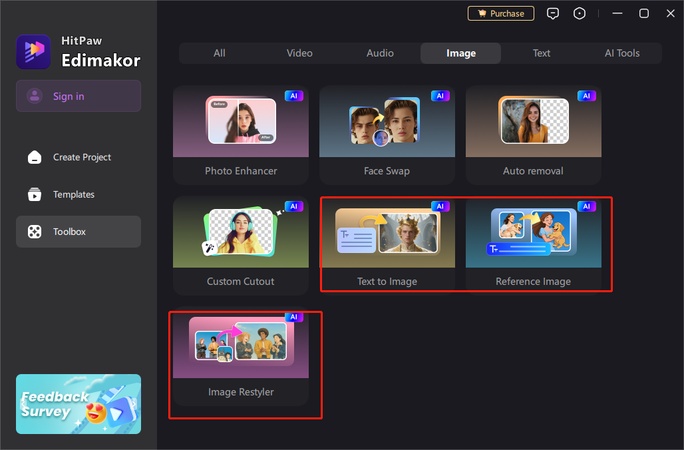

Step 2: Use AI Image Feature

- From the toolbox, select the Text to Image tool. Write a thorough description of the scene you want in your prompt. Pick a style and an aspect ratio. To create the image, click the Generate button.

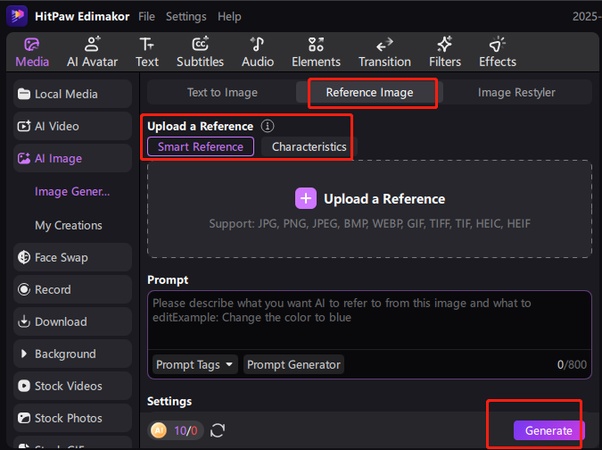

- While you are still in the AI Image area, click Image with Reference. Upload a reference image. Next, type a prompt and click Generate.

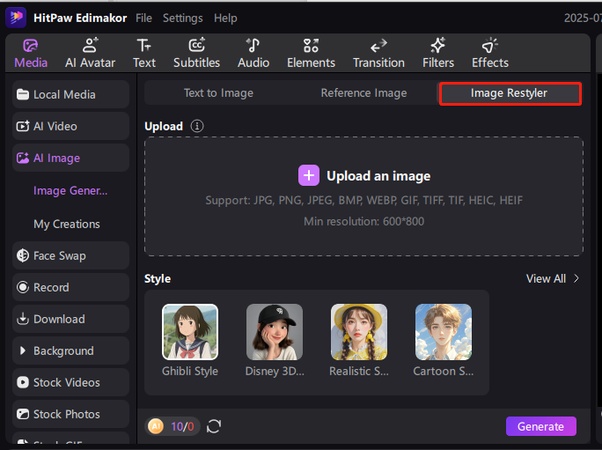

- Select the Image Restyler tab. Upload any picture you want to give a fresh look. After choosing a preset style, click Generate.

Step 3: Use AI Video Feature

-

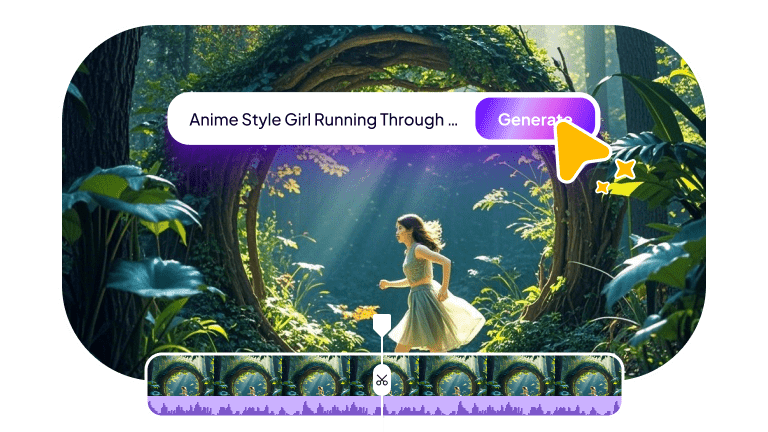

Navigate to Edimakor's AI Video section. Select the tab for Text to Video. Choose the video's time and aspect ratio, and write a thorough video prompt. To allow the AI to produce your video, click Generate.

-

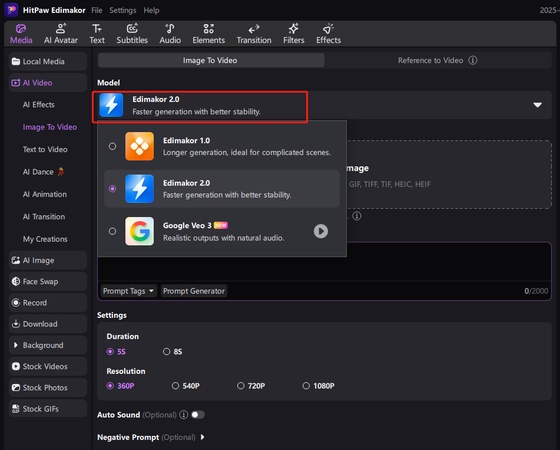

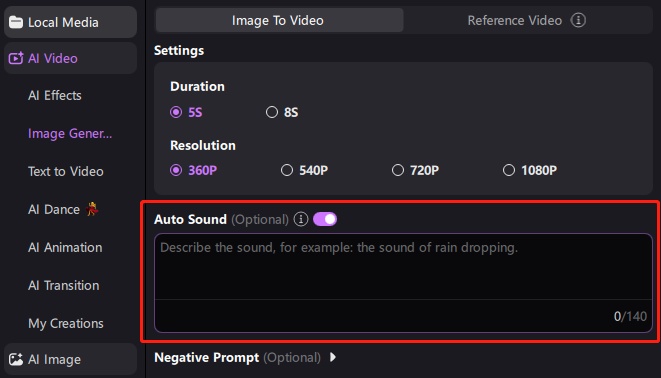

Select Image to Video in the same AI Video section. Upload a picture of an illustration, character, or landscape. After writing the prompt and choosing the video's length and quality, click Generate.

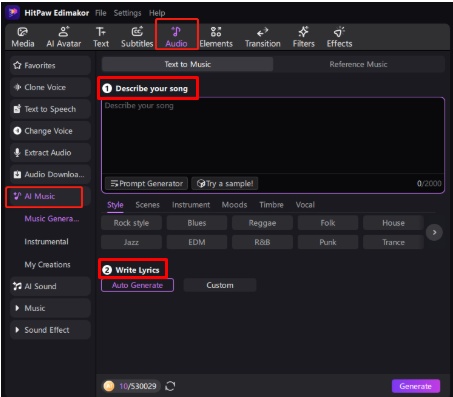

Step 4: Use AI Music Feature

- Choose AI Music from the Audio tab and open the Text to Music tool. Enter a text prompt, select a genre, then click Generate to create music.

-

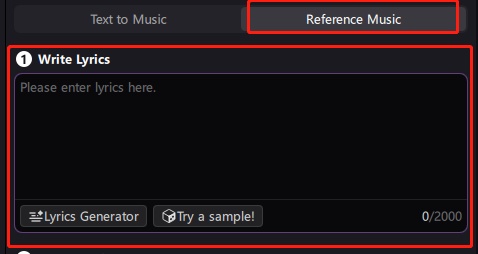

Select Reference Music, upload an audio clip, pick a mood or genre, then click Generate to create a similar track.

Part 2: Mastering AI Art: A Creator’s Guide to Stable Diffusion

Once you understand the fundamentals, Stable Diffusion's true potential becomes apparent when you use it in more advanced ways. This part shows you how to set up and optimise your AI art workflow.

1 Why Creators Are Embracing Stable Diffusion

- Stable Diffusion gives artists full control over the prompt, so they can create original, customised images without relying on pre-made templates.

- Because it is open-source, it is free to use, alter, and distribute, making it ideal for creators who want personalisation without significant expense.

- A thriving international community provides free models, advice, challenges, and critique, helping creators to develop, work together, and stay inspired.

2 Setting Up Your Stable Diffusion Environment

Step 1: Set up Git, Python, and Developer Accounts

Install Python from the official website. To confirm the version, run python --version in the command prompt. After that, install Git and, if you are not familiar with it, follow a basic tutorial. Once these tools are configured, create accounts on Hugging Face and GitHub. You can obtain the Stable Diffusion model files from Hugging Face, and you can access the web UI code on GitHub.
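A quick way to confirm that both tools are ready is a short Python check like the sketch below. The minimum version used here, 3.10, is an assumption for illustration only; check the README of the web UI you plan to install for the version it actually requires. The helper names are hypothetical.

```python
import shutil
import sys

# Assumed threshold for illustration; the web UI's README states the real one.
MIN_PYTHON = (3, 10)


def python_ok(version=sys.version_info, minimum=MIN_PYTHON) -> bool:
    """Return True if the major.minor version meets the minimum."""
    return tuple(version[:2]) >= tuple(minimum)


def tool_on_path(name: str) -> bool:
    """Return True if an executable with this name is found on PATH."""
    return shutil.which(name) is not None


if __name__ == "__main__":
    running = sys.version.split()[0]
    print(f"Python {running}:", "OK" if python_ok() else "older than assumed minimum")
    print("Git:", "found" if tool_on_path("git") else "not found on PATH")
```

If Git is reported as missing right after installation, opening a new terminal window is often enough for the updated PATH to take effect.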

Step 2: Clone the Stable Diffusion Web UI and Download the Model

After installing Git, open Git Bash, use the cd command to move to the folder where you want the files, and then run the git clone command for the Stable Diffusion Web UI repository. This downloads all required files to your computer. After that, sign in to Hugging Face, then find and download the Stable Diffusion model file you wish to use.
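The clone step and the place where the model file goes can be sketched in Python as follows. This assumes the widely used AUTOMATIC1111 web UI repository and its models/Stable-diffusion checkpoint folder; the article does not name a specific repository, so substitute the URL and folder layout of whichever web UI you choose. The function names and example file name are hypothetical.

```python
import subprocess
from pathlib import Path

# Assumption: the AUTOMATIC1111 web UI. Replace with the repository you use.
REPO_URL = "https://github.com/AUTOMATIC1111/stable-diffusion-webui.git"


def clone_command(repo_url: str, target: Path) -> list:
    """Build the git clone command as an argument list."""
    return ["git", "clone", repo_url, str(target)]


def model_destination(webui_dir: Path, model_filename: str) -> Path:
    """Return where a downloaded checkpoint should be placed.

    Assumption: this web UI reads checkpoints from models/Stable-diffusion.
    """
    return webui_dir / "models" / "Stable-diffusion" / model_filename


def clone(repo_url: str, target: Path) -> None:
    """Run git clone and raise an error if it fails."""
    subprocess.run(clone_command(repo_url, target), check=True)


if __name__ == "__main__":
    webui = Path("stable-diffusion-webui")
    print(" ".join(clone_command(REPO_URL, webui)))
    # "model.safetensors" is a placeholder for the file you downloaded.
    print(model_destination(webui, "model.safetensors"))
```

The script only prints the command and the destination path; call clone() yourself once you are happy with the target folder.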

Step 3: Configure and Start Stable Diffusion Locally

Open Command Prompt, use cd to move into the Stable Diffusion Web UI folder, and run the setup script. This automatically creates a virtual environment and installs all necessary components. Once installation finishes, a local web address appears in your terminal; open this URL in your browser. You can now start creating AI images straight from your computer by entering prompts.
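Besides typing prompts in the browser, a local install can sometimes be scripted. The sketch below assumes the AUTOMATIC1111 web UI started with its --api option, listening on the default local address http://127.0.0.1:7860 and exposing the /sdapi/v1/txt2img endpoint. Other web UIs differ, so treat the URL, the payload fields, and the helper names as assumptions to verify against your own setup.

```python
import base64
import json
import urllib.request

# Assumed default local address of the web UI; yours may differ.
BASE_URL = "http://127.0.0.1:7860"


def build_payload(prompt, negative_prompt="", steps=20, width=512, height=512):
    """Assemble the JSON body for a text-to-image request."""
    return {
        "prompt": prompt,
        "negative_prompt": negative_prompt,
        "steps": steps,
        "width": width,
        "height": height,
    }


def generate(payload, base_url=BASE_URL) -> bytes:
    """POST the payload and return the first image as raw bytes.

    Assumes the response JSON holds base64-encoded images under "images".
    """
    request = urllib.request.Request(
        f"{base_url}/sdapi/v1/txt2img",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(request) as response:
        body = json.load(response)
    return base64.b64decode(body["images"][0])
```

With the web UI running, something like `open("castle.png", "wb").write(generate(build_payload("a castle at sunset", "blurry")))` would save the result to disk. Keeping the payload builder separate from the network call makes it easy to inspect the request before sending it.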

3 Prompt Engineering: Crafting Effective Text Prompts

Prompt engineering is the skill of writing precise, evocative input text that directs AI models such as Stable Diffusion toward the exact visual output you want. It involves choosing the right keywords, styles, and modifiers, and even camera angles or lighting cues, to shape the resulting image.

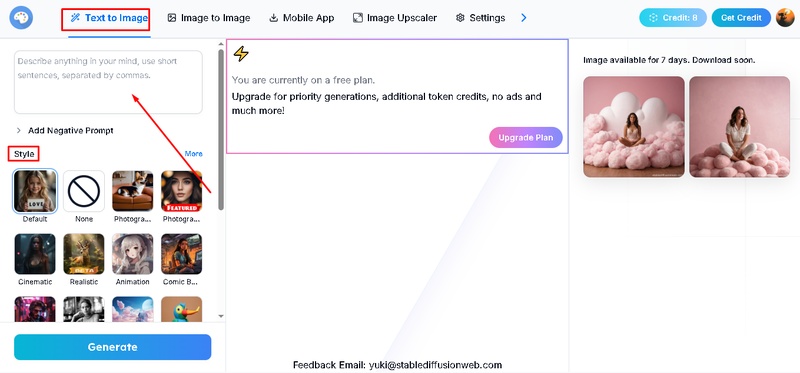
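One way to keep prompts consistent is to assemble them from named parts. The sketch below is a hypothetical helper, not a feature of Stable Diffusion itself: it joins a subject with optional style, modifiers, lighting, and camera cues into a comma-separated prompt, and builds a matching list of unwanted traits.

```python
def build_prompt(subject, style="", modifiers=(), lighting="", camera=""):
    """Join the non-empty parts of a prompt with commas, in a fixed order."""
    parts = [subject, style, *modifiers, lighting, camera]
    return ", ".join(part.strip() for part in parts if part and part.strip())


def build_negative_prompt(terms):
    """Lower-case the terms, drop blanks and duplicates, and join them."""
    cleaned = []
    for term in terms:
        term = term.strip().lower()
        if term and term not in cleaned:
            cleaned.append(term)
    return ", ".join(cleaned)


if __name__ == "__main__":
    print(build_prompt(
        "a lighthouse on a cliff",
        style="watercolor",
        modifiers=["highly detailed"],
        lighting="golden hour",
        camera="wide angle",
    ))
    print(build_negative_prompt(["Blurry", "extra limbs", "watermark"]))
```

Keeping the parts separate makes it easy to change one element, such as the lighting, while holding everything else constant, so you can see what each cue contributes.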

4 Step by Step to Use Stable Diffusion

- Access Stable Diffusion Online: Go to a Stable Diffusion platform like Leonardo AI, Clipdrop, Mage.space, or any other online version available.

- Select the Mode: Choose between available modes such as Text to Image, Image to Image, or Inpainting, depending on what you want to generate.

- Add Resources and Choose Style: If using Text to Image, type your prompt and pick a style. If using Image to Image, upload your image, then add a prompt to guide the transformation.

- Export the Final Image: Once satisfied with the preview, hit Download to save the AI-generated image to your device.

5 Showcasing Stable Diffusion Art

A crucial component of developing as a Stable Diffusion artist is sharing your AI-generated works. Popular platforms for showcasing art, acquiring followers, and getting feedback include DeviantArt, Instagram, ArtStation, and Twitter. In order to demonstrate their abilities and maintain inspiration, many creators also take part in themed challenges on Reddit and Discord forums.

Part 3: Community and Resources for Stable Diffusion Artists

Stable Diffusion has spawned a sizable community of AI enthusiasts, developers, and digital artists, and they are constantly exchanging resources, guides, and assistance. A robust resource network can boost your productivity, whether you are making bespoke models or beautiful pictures.

Communities and Resources for Stable Diffusion

- Discord servers dedicated to Stable Diffusion art and the subreddit r/StableDiffusion host daily discussions, showcase artwork, and answer questions from novices and experts alike. These communities encourage collaboration, participation in challenges, and quick troubleshooting.

- Developers can draw on open-source codebases such as the original Stable Diffusion repository on GitHub, or on APIs like Banana.dev, RunDiffusion, and Stability AI. These tools support automation workflows, model training, and backend integration.

- Medium blogs and YouTube channels such as PromptHero and MattVidPro AI break down everything from prompt writing to inpainting techniques. In the meantime, curated guides are offered by sites.

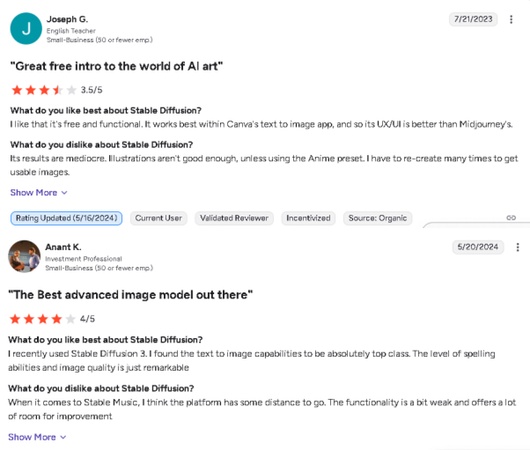

Community Reviews and Shared Resources for Stable Diffusion

Gain valuable insights and discover cutting-edge tools for Stable Diffusion by exploring community reviews and shared resources, maximizing your creative output.

Part 4: The Economic and Creative Impact of Stable Diffusion

By democratising image generation, Stable Diffusion has reshaped the creative and commercial landscape. It has lowered production costs, empowered artists and freelancers, and opened up new revenue sources in a variety of sectors.

1 Affordable Content Production

Thanks to Stable Diffusion, studio shoots and costly software are no longer a prerequisite for quality visuals. This makes design tools more accessible and productive, and it lets marketers, freelancers, and small businesses compete in visual branding without significant financial investment.

2 Growing the Freelance Economy

Freelancers now offer AI-generated artwork, personalised avatars, concept designs, and illustrations. Platforms such as Fiverr and Upwork list thousands of gigs built on Stable Diffusion services.

3 Promoting Innovation in the Creative Sector

Stable Diffusion speeds up ideation and design processes in everything from fashion prototypes to movie pre-visualisation. Creative teams can visualise concepts instantly, iterate rapidly, and collaborate more effectively.

Part 5: Comparing Stable Diffusion with Other AI Models

Stable Diffusion is notable for its offline functionality and open-source adaptability. However, alternatives such as Leonardo AI, DALL·E 3, and Midjourney have their own advantages in accessibility, realism, and creativity.

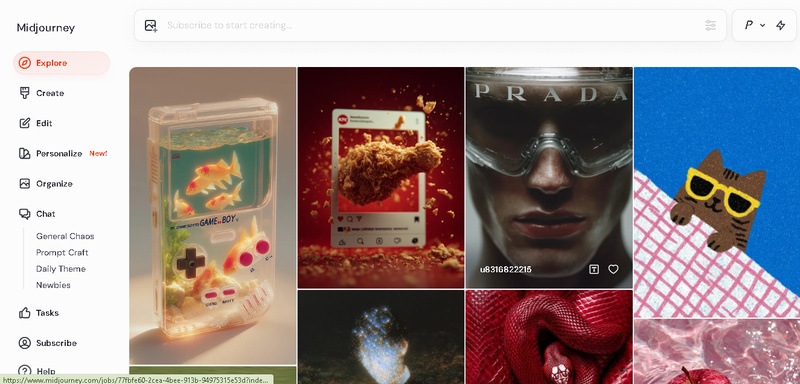

Midjourney

Key Differences from SD:

Midjourney requires no local install and runs on Discord-based prompts, with an emphasis on creative, stylised outputs, whereas SD offers offline customisation options.

Ideal for:

Artists looking for inventive illustrations, concept sketches, and dreamy, beautiful images for fantasy projects, social media posts, or branding.

DALL·E 3 (by OpenAI)

Key Differences from SD:

DALL·E 3 lacks SD's user-side customisation, but it emphasises realism and context understanding and is integrated with ChatGPT for conversational prompting.

Ideal for:

Users who desire context-aware, incredibly detailed images that are produced straight from text with little technical setup or modification.

Adobe Firefly

Key Differences from SD:

Centred on producing commercially safe results using the editing tools, text effects, and Adobe integration included in Creative Cloud applications.

Ideal for:

Designers who need smooth workflows in Photoshop or Illustrator and content that is safe for commercial use.

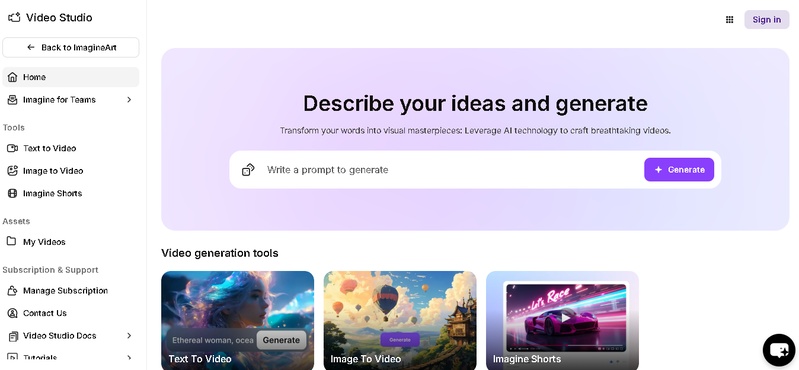

Leonardo AI

Key Differences from SD:

Provides fine-tuned models for characters, UI design, and gaming assets with an easy-to-use interface and pre-made templates.

Ideal for:

Digital artists and game developers who produce characters, UI components, or large batches of consistent assets for commercial projects.

RunwayML

Key Differences from SD:

Focuses on creative video tools, green screen removal, and AI video generation rather than image creation alone.

Ideal for:

Content creators and editors who want to produce AI-generated video, motion graphics, and interactive media with user-friendly web tools.

FAQs on Stable Diffusion AI Image Generator

A1. Yes, a number of third-party mobile apps are built on Stable Diffusion technology. Apps like Diffus and Draw Things bring AI art generation to mobile, but they are limited compared to desktop versions.

A2. Absolutely. Urban layouts, interior design, and architectural concepts can all be visualized with the aid of Stable Diffusion. Architects and designers can produce concept images rapidly with the help of appropriate models and well-crafted prompts.

A3. Stable Diffusion is not primarily an upscaler, although specific extensions, such as SD Upscaler or Real-ESRGAN plugins, improve image resolution. It performs well, but dedicated upscalers like Topaz Gigapixel AI often produce better results for photo-realistic upscaling tasks.

A4. Typical examples of terms to exclude are blurry, extra limbs, bad quality, poorly drawn, disfigured, and watermark.

Wrapping Up

Stable Diffusion continues to be a revolutionary force in the field of AI art. Its community keeps it developing quickly, and its open-source nature offers developers great flexibility. However, HitPaw Edimakor (Video Editor) is a strong option for anyone looking for a simpler, more approachable solution because it combines voice, music, video, and images in a single intelligent interface.
